Luca Zanella

PhD Student,

University of Trento

Trento, Italy

Hi! I’m Luca, a PhD student in the Multimedia and Human Understanding Group at the University of Trento, where I’m advised by Elisa Ricci and co-advised by Massimiliano Mancini. I’m also a Student Researcher at Google Zurich, working in Federico Tombari’s group within the team led by Alessio Tonioni. My research focuses on vision-language models for video understanding, with applications ranging from step grounding in instructional videos to video-text alignment evaluation and anomaly detection in surveillance footage.

Contact: luca [dot] zanella-3 [at] unitn [dot] it

news

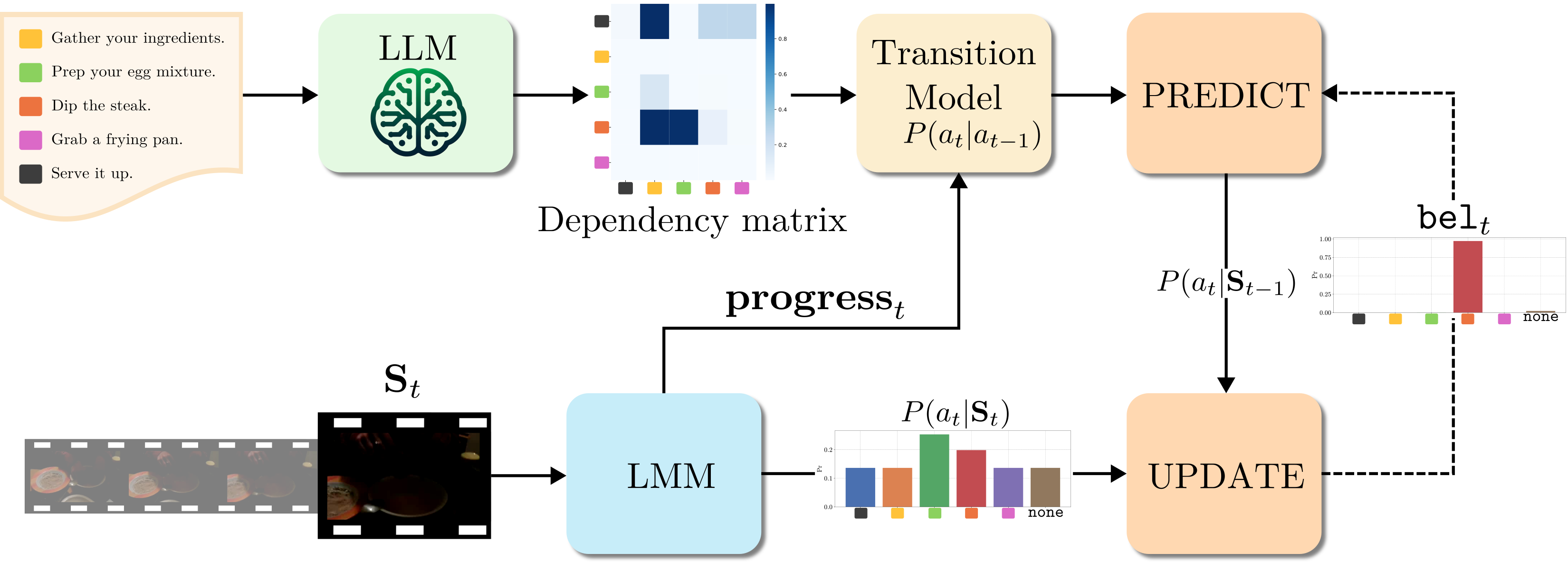

| Sep 18, 2025 | Our paper “Training-free Online Video Step Grounding” is accepted to NeurIPS 2025! |

|---|---|

| Aug 18, 2025 | I have joined Google Zurich as a Student Researcher on the team led by Alessio Tonioni! |

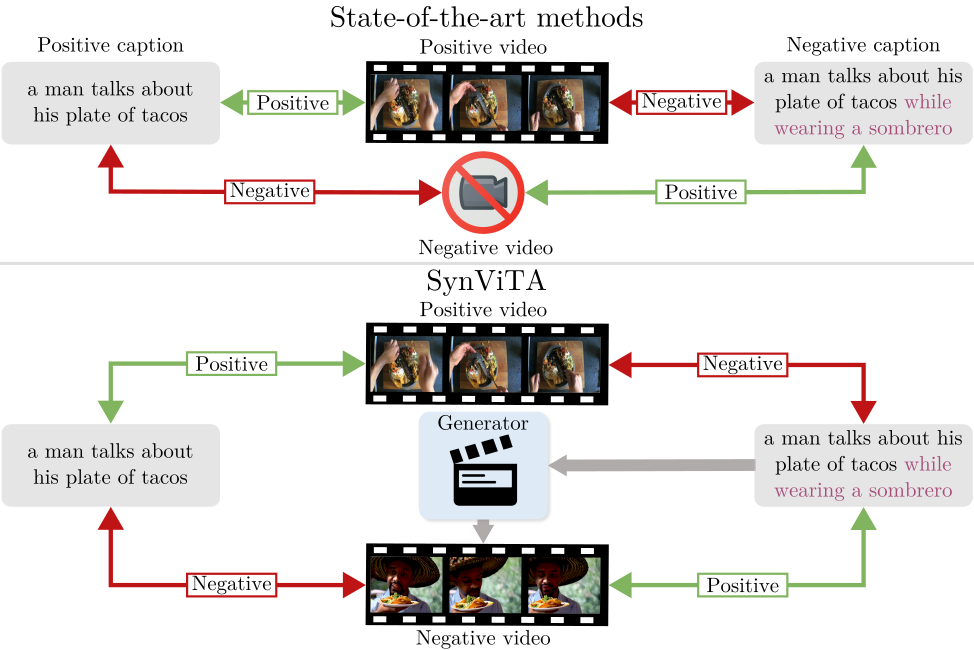

| Feb 26, 2025 | Our paper “Can Text-to-Video Generation help Video-Language Alignment?” is accepted to CVPR 2025! Also honored to be among the outstanding reviewers. |

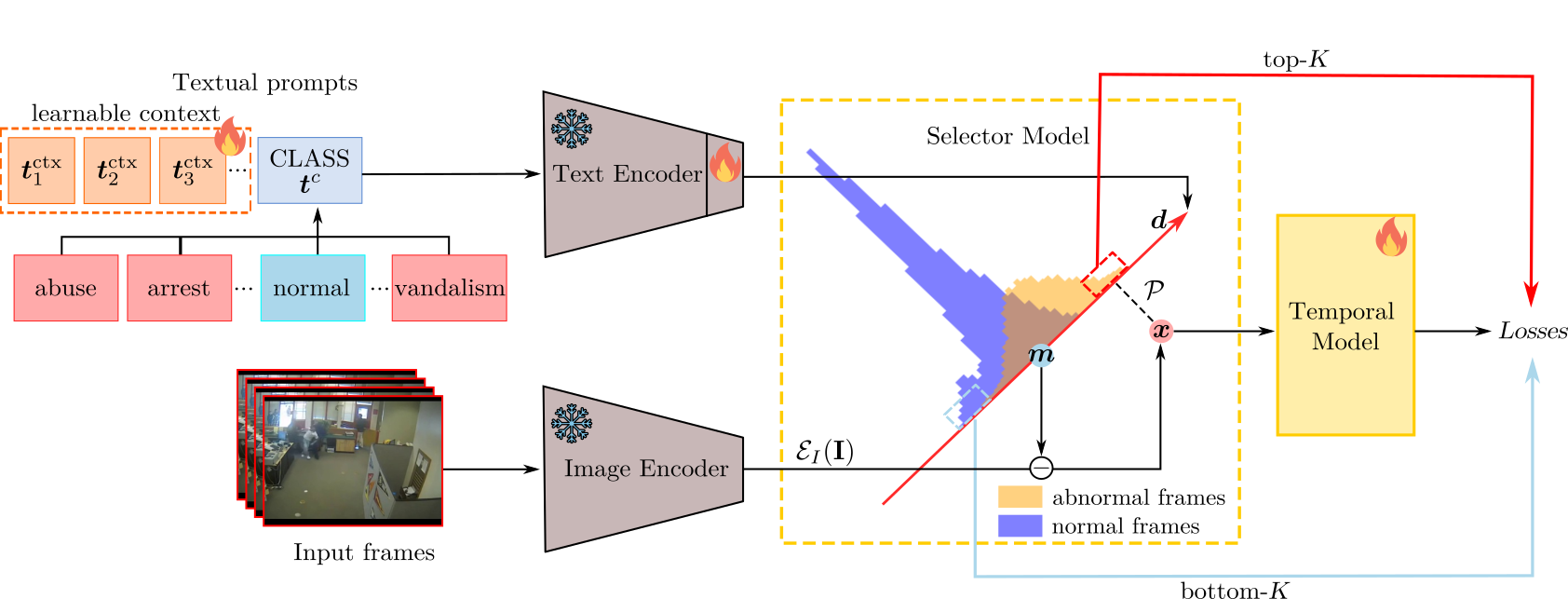

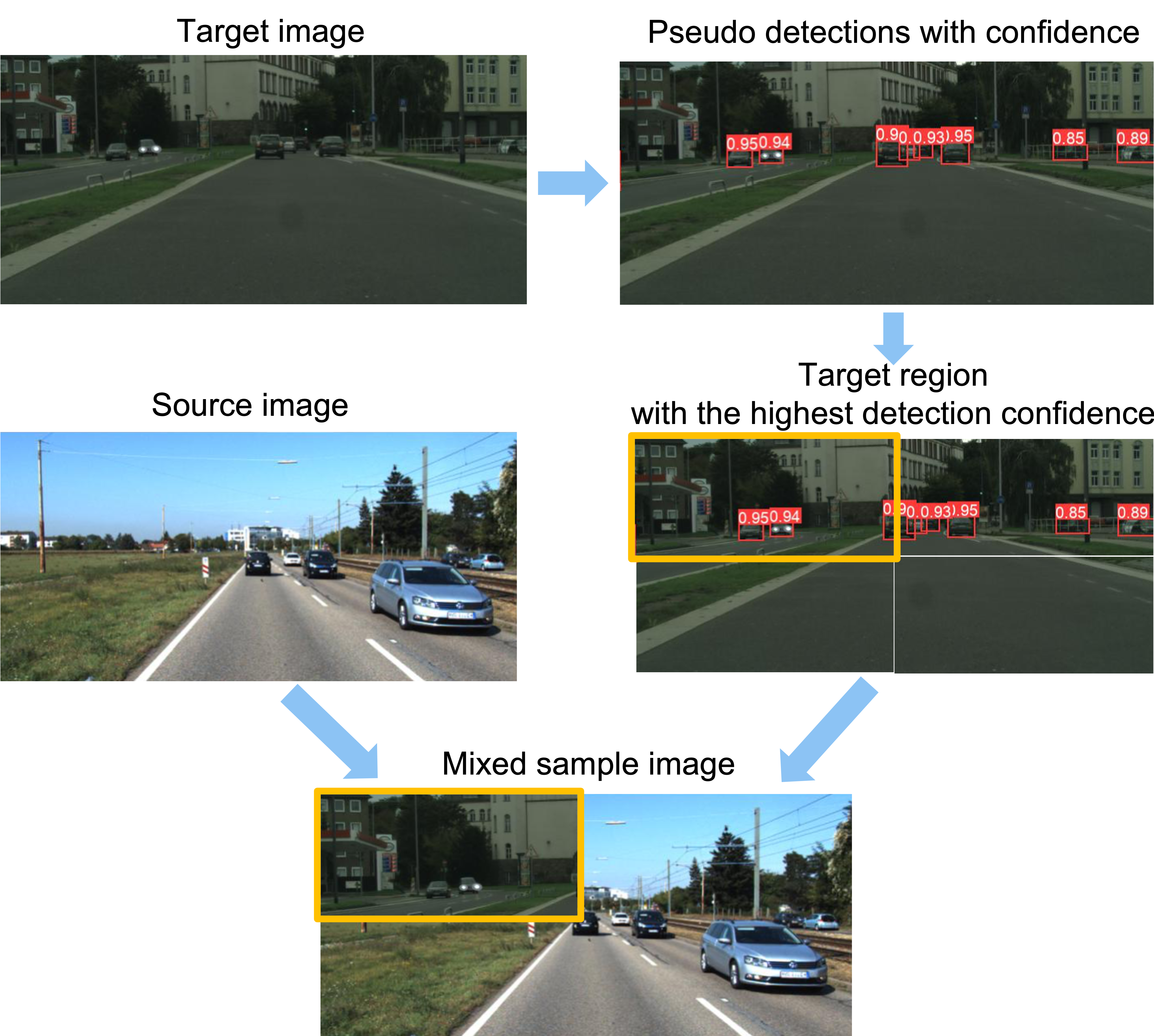

| Sep 04, 2024 | Our paper “Delving into clip latent space for video anomaly recognition” is accepted to CVIU! |

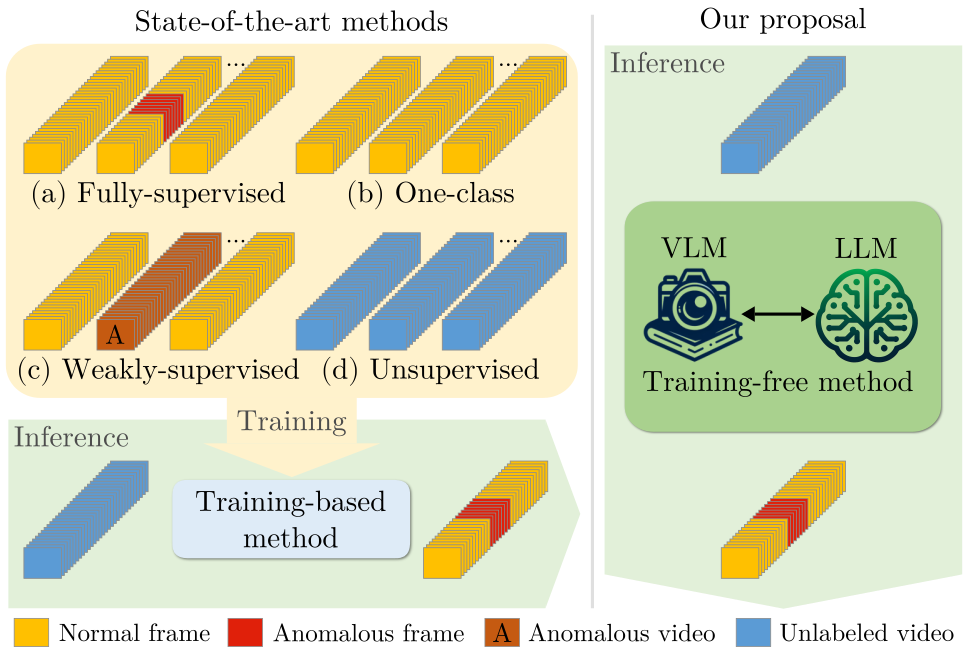

| Feb 27, 2024 | Our paper “Harnessing large language models for training-free video anomaly detection” is accepted to CVPR 2024! |